Bing to publishers: Start using new Bing URL submission process for indexing now

Do you have new or updated content you want Bing to discover faster? Start using the new Bing URL Submission tool

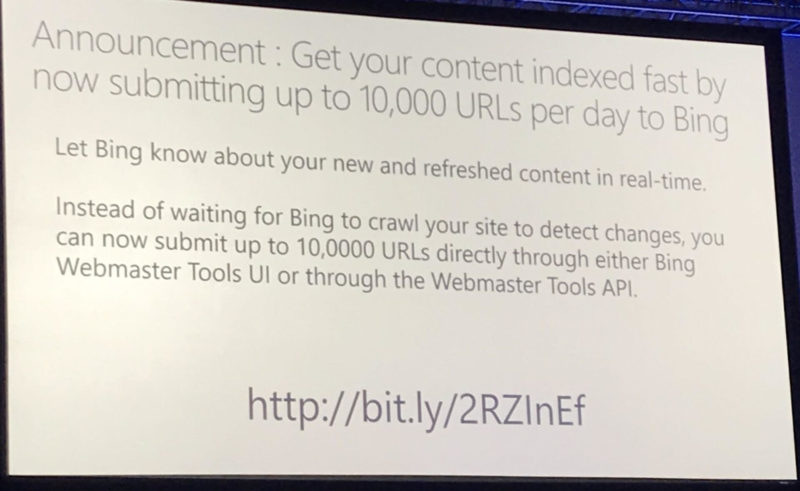

Last week at SMX West, Bing announced it is now allowing webmasters, publishers and site owners to submit up to 10,000 URLs per day to its search engine for crawling and indexing. That is a 1,000x increase over the previous quota Bing had for this tool. There is a reason for that increase. Bing said in the announcement, “We believe that enabling this change will trigger a fundamental shift in the way that search engines, such as Bing, retrieve and are notified of new and updated content across the web.”

I spoke with Christi Olson, Bing’s head of search evangelism, who stressed that this is the direction Bing is going. If you want your new or updated content to be in the Bing index, you will eventually need to use the Bing URL submission process either through Bing Webmaster Tools or the APIs.

Today, crawling isn’t changing. For now, Bing will continue to crawl at the scale it is crawling now. It will not reduce any resources put into crawling the web at this time. But ultimately, Bing wants to reduce the effort and resources put into crawling the web.

Bing wrote in that announcement, “eventually search engines can reduce crawling frequency of sites to detect changes and refresh the indexed content.”

Strong crawl signal. If you use the URL submission tool today, it is a “strong” signal that Bing needs to crawl that URL right now, Christi Olson said. Bing will go out of its way to shift crawl resources around to crawl the URL you submitted via the URL submission tool.

The future. Bing explained to us that in a few years, the hope is to not rely on crawling the web to find new or updated content. Instead, webmasters, SEOs and publishers will use Bing’s tool, or their content management platforms will submit content automatically via the API.
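For readers wondering what an automated submission from a content management platform might look like, here is a minimal sketch in Python. The endpoint URL, the `apikey` query parameter and the `siteUrl` / `urlList` field names are my assumptions about the Bing Webmaster API's batch submission call and should be checked against Bing's documentation before use. The `build_request` and `submit` helper names are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint for batch URL submission; confirm against Bing's API docs.
ENDPOINT = "https://ssl.bing.com/webmaster/api.svc/json/SubmitUrlbatch"

# Top daily limit cited in the announcement; a given site's quota may be lower.
DAILY_QUOTA = 10_000


def build_request(site_url, urls, api_key, quota=DAILY_QUOTA):
    """Build (but do not send) a batch submission request."""
    # Drop duplicate URLs while keeping order, then cap the batch at the quota.
    batch = list(dict.fromkeys(urls))[:quota]
    body = json.dumps({"siteUrl": site_url, "urlList": batch}).encode("utf-8")
    return urllib.request.Request(
        f"{ENDPOINT}?apikey={api_key}",
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


def submit(request):
    """Send a prepared request and return the HTTP status code."""
    with urllib.request.urlopen(request) as response:
        return response.status
```

A publishing hook could call `build_request("https://example.com", new_or_updated_urls, api_key)` whenever content is saved and pass the result to `submit`. Keeping the two steps separate makes it possible to inspect the payload without touching the network.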

When will this happen exactly? That is unclear; there is no specific deadline at this time.

Impact on links. The value Bing places on links will not change as a result of this. This is a crawling and indexing procedure, not a ranking procedure.

Ultimately, if Bing is not finding new URLs or updated links within fresh new pages, that may impact its link graph. But Olson said that even if you submit a new page to Bing via the URL submission tool and there are new pages linked to from that page, Bing will crawl those new pages.

For example, if you submit domain.com/abc.html as a new page, and that page links to domain.com/def.html, Bing will also crawl /def.html if it is new to the index. However, if /def.html was already in Bing’s index, then it will not recrawl it because the URL wasn’t submitted as updated.
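The rule in that example can be written out as a toy model. This is only an illustration of the behavior Olson described, not Bing's actual crawler logic, and the `urls_to_crawl` function name and its parameters are hypothetical.

```python
def urls_to_crawl(submitted_url, outlinks, indexed):
    """Return the URLs crawled after one submission, per the rule described above."""
    crawl = [submitted_url]  # the submitted URL itself is always crawled
    for link in outlinks:
        # A linked page is crawled only if it is not already in the index.
        if link not in indexed and link not in crawl:
            crawl.append(link)
    return crawl
```

Calling `urls_to_crawl("domain.com/abc.html", ["domain.com/def.html"], indexed=set())` returns both URLs. If `domain.com/def.html` is already in `indexed`, only the submitted page comes back, so an updated /def.html would need its own submission.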

Spam. Bing does expect some spammers to try to abuse the tool. That is why there are quotas in place, based on factors such as how long a site has been verified in Bing Webmaster Tools, that determine how much content you can submit. Plus, if you continue to submit old or spammy content to Bing, I suspect that might impact your quotas or even get you penalized.

Here are those quotas:

Use it now. There is really no reason why webmasters should not use the tool, especially on sites that produce new content daily or constantly update older pages with fresh content. Olson recommends that news sites and other sites with new and updated content start using this feature today.

Yoast SEO announced it has integrated with this new API. So WordPress sites that use the Yoast SEO plugin will see the crawl benefit from this almost immediately.

Why it matters. This is a fundamental shift in how search engines discover, crawl and index new and updated content. If Google also adopts this method, it will change how SEOs, publishers and webmasters produce and share content going forward. If you are producing new content or updating old content and want Bing to find it sooner, start using this feature today.